Summary Task 2

1. Description of the Problem

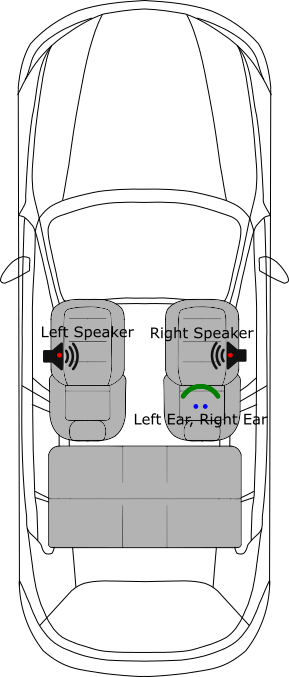

A person with hearing loss is wearing their hearing aids and sitting in a car. They're listening to recorded music played over the car stereo (see Figure [1]).

Your task is to process the music played from the stereo to improve its audio quality, taking into account the presence of the car noise.

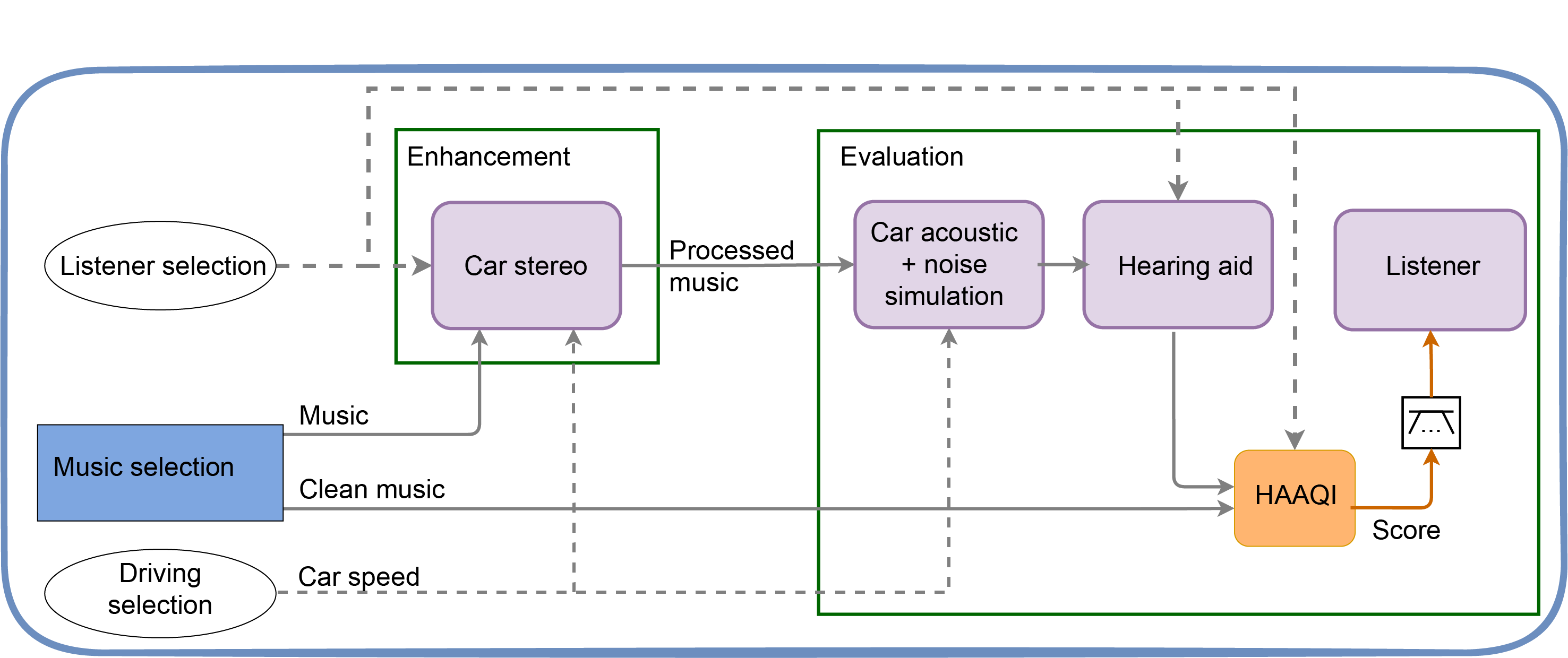

As shown in Figure [2], the system is split into two stages: enhancement and evaluation.

1.1 Enhancement Stage

You can adapt and modify the baseline enhancement script or make your own script.

Your task is to process the music in a way that improves its reproduced quality. For this, you have access to the car speed and other metadata, which give an estimate of the power spectrum of the noise. You do not have the noise signal itself, so this is not a noise cancellation task.
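As one illustration of shaping the music around an estimated noise spectrum, the sketch below computes per-band gains that boost the bands where the estimated SNR is poor. It is a hypothetical heuristic, not the baseline's method: the function name `band_gains`, the `max_boost_db` cap, and the representation of the noise estimate as an array of per-band powers (which you would have to derive from the car speed and other metadata) are all assumptions.

```python
import numpy as np


def band_gains(music_band_power, noise_band_power, max_boost_db=10.0):
    """Hypothetical per-band amplitude gains that favour noise-masked bands.

    music_band_power: power of the music in each frequency band.
    noise_band_power: estimated noise power in the same bands (assumed to be
        derived from the car metadata; no noise signal is available).
    """
    music = np.asarray(music_band_power, dtype=float)
    noise = np.asarray(noise_band_power, dtype=float)

    # Per-band SNR in dB; the small epsilon avoids division by zero.
    snr_db = 10.0 * np.log10((music + 1e-12) / (noise + 1e-12))

    # Boost only bands whose SNR is below the median band, up to max_boost_db.
    boost_db = np.clip(np.median(snr_db) - snr_db, 0.0, max_boost_db)
    gains = 10.0 ** (boost_db / 20.0)

    # Rescale so the total music power is unchanged: a global gain would not
    # change the SNR anyway, because the noise is scaled relative to the music.
    scale = np.sqrt(music.sum() / np.sum(music * gains**2))
    return gains * scale


# Four equal-power music bands; the noise dominates the upper two bands.
gains = band_gains([1.0, 1.0, 1.0, 1.0], [0.01, 0.01, 1.0, 1.0])
print(np.round(gains, 3))
```

Because the rescaling keeps the total power fixed, boosting the masked bands necessarily attenuates the others; whether that trade-off improves the score for a given listener is something you would need to check with the evaluation script.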

1.1.1 Dataset

In the enhancement stage, you have access to:

- A music dataset containing 5600 30-second music excerpts from 8 genres.

- Metadata of:

  - Listener characteristics (audiograms); see the listener data page.

  - Car speed and other car metadata. This is very important, as it provides the information needed to approximately estimate the noise spectrum.

  - SNR at the hearing aids. This tells you how loud the car noise is relative to the music.

The SNR at the hearing aid microphones is defined relative to the music. This means that simply increasing the music level will also increase the noise level, so the SNR stays the same.
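A minimal sketch of what an SNR defined relative to the music implies, assuming the SNR is computed from the mean signal power over the whole excerpt. The exact definition (for example per channel, or after HRTF filtering) is set by the evaluation script and may differ; the function name `scale_noise_to_snr` is hypothetical.

```python
import numpy as np


def scale_noise_to_snr(music, noise, snr_db):
    """Scale noise so that 10*log10(P_music / P_noise) equals snr_db."""
    music = np.asarray(music, dtype=float)
    noise = np.asarray(noise, dtype=float)
    p_music = np.mean(music**2)
    p_noise = np.mean(noise**2)
    gain = np.sqrt(p_music / (p_noise * 10.0 ** (snr_db / 10.0)))
    return noise * gain


rng = np.random.default_rng(0)
music = rng.standard_normal(32_000)
noise = rng.standard_normal(32_000)

quiet = scale_noise_to_snr(music, noise, snr_db=5.0)
loud = scale_noise_to_snr(2.0 * music, noise, snr_db=5.0)

# Doubling the music doubles the scaled noise, so the SNR does not change.
print(np.allclose(loud, 2.0 * quiet))
```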

Please refer to the Task 2 data page and the baseline README for details.

To download the datasets, please visit the Download Data and Software page.

1.1.2 Output

The output of this stage is one stereo signal:

- Sample rate = 32 kHz

- Precision: 16-bit integer

- Compressed using FLAC
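Assuming your processing produces a floating-point stereo signal in the range [-1, 1], the sketch below checks the channel layout and converts it to 16-bit integers. The function name `to_pcm16_stereo` and the (n_samples, 2) array layout are assumptions; resampling to 32 kHz, if your pipeline runs at a different rate, is not shown.

```python
import numpy as np

SAMPLE_RATE = 32_000  # Hz, required output sample rate


def to_pcm16_stereo(signal):
    """Convert a float stereo signal in [-1, 1] to int16 samples.

    signal: array of shape (n_samples, 2).
    """
    x = np.asarray(signal, dtype=np.float64)
    if x.ndim != 2 or x.shape[1] != 2:
        raise ValueError("expected a stereo signal of shape (n_samples, 2)")
    # Hard-clip anything outside [-1, 1] before scaling to the int16 range.
    x = np.clip(x, -1.0, 1.0)
    return np.round(x * 32767).astype(np.int16)


pcm = to_pcm16_stereo([[0.0, 0.5], [-1.0, 1.5]])
print(pcm.dtype, pcm.shape)
```

The resulting array can then be written as FLAC, for example with the soundfile package: `soundfile.write(path, pcm, SAMPLE_RATE, subtype="PCM_16")`, where `path` ends in `.flac` (file naming follows the submission page). Hard clipping here is only a safety net; controlling the level before conversion is preferable.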

For more details about the format of the submission, please refer to submission webpage.

You are responsible for the final signal level. Bear in mind that if your signals seem uncomfortably loud to a member of the listening panel, they may well turn down the volume. Also, signals that are too large may clip in the evaluation stage.
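One simple safeguard against clipping is to attenuate each output so that its peak stays below a chosen ceiling. The sketch assumes a floating-point signal and uses a hypothetical ceiling of -1 dBFS. Since the evaluation applies HRTFs and adds noise to your signal, you may need more headroom than this, and peak limiting alone does not address loudness comfort.

```python
import numpy as np


def limit_peak(signal, ceiling_dbfs=-1.0):
    """Scale a float signal down (never up) so its peak is <= ceiling_dbfs."""
    x = np.asarray(signal, dtype=np.float64)
    ceiling = 10.0 ** (ceiling_dbfs / 20.0)  # dBFS -> linear amplitude
    peak = np.max(np.abs(x)) if x.size else 0.0
    if peak > ceiling:
        x = x * (ceiling / peak)
    return x


loud = np.array([[0.2, -1.8], [0.9, 0.4]])
safe = limit_peak(loud)
print(np.max(np.abs(safe)))  # about 0.891, i.e. -1 dBFS
```

Applying a single gain to the whole excerpt, rather than limiting sample by sample, preserves the waveform shape and the balance between the two channels.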

1.2 Evaluation Stage

You are not allowed to change the evaluation script provided in the baseline. Your output signals will be scored using this script.

The evaluation stage is common to all submissions.

As shown in Figure [2], the evaluation also takes the reference music signal as input. Note that, in this figure, the Music and the Clean Music are the same signal but are shown on separate lines for illustration purposes.

In this stage, both the enhanced and the reference signals are processed before the HAAQI evaluation (see Core Software).

1.2.1 Processing of the enhanced signal

- Generate car noise based on the parameters from the metadata.

- Apply anechoic HRTFs to the noise.

- Apply car HRTFs to the enhanced signal.

- Scale the noise to match the SNR at the hearing aids.

- Add both signals.
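To make the order of operations concrete, here is a simplified sketch of the enhanced-signal chain. It is not the official evaluation code, which you must not modify: car-noise generation is omitted (the noise is passed in), HRTFs are represented as arrays of impulse responses of shape (n_sources, 2, n_taps), and the SNR is assumed to be measured from mean power at the ears. All function names are hypothetical.

```python
import numpy as np


def apply_hrirs(signal, hrirs):
    """Filter each source to each ear and sum the contributions at the ears.

    signal: shape (n_samples, n_sources)
    hrirs:  shape (n_sources, 2, n_taps), one impulse response per source/ear
    returns shape (n_samples, 2), truncated to the input length
    """
    n = signal.shape[0]
    ears = np.zeros((n, 2))
    for src in range(signal.shape[1]):
        for ear in range(2):
            ears[:, ear] += np.convolve(signal[:, src], hrirs[src, ear])[:n]
    return ears


def simulate_car_listening(enhanced, noise, car_hrirs, anechoic_hrirs, snr_db):
    """Sketch of the evaluation steps applied to the enhanced signal."""
    # Car HRTFs on the enhanced stereo signal (two loudspeakers -> two ears).
    music_at_ears = apply_hrirs(np.asarray(enhanced, dtype=float), car_hrirs)
    # Anechoic HRTFs on the (already generated) mono car noise.
    noise_at_ears = apply_hrirs(
        np.asarray(noise, dtype=float)[:, None], anechoic_hrirs
    )
    # Scale the noise to the target SNR relative to the music at the ears.
    p_music = np.mean(music_at_ears**2)
    p_noise = np.mean(noise_at_ears**2)
    noise_at_ears *= np.sqrt(p_music / (p_noise * 10.0 ** (snr_db / 10.0)))
    # Add both signals.
    return music_at_ears + noise_at_ears


rng = np.random.default_rng(1)
enhanced = rng.standard_normal((1000, 2))
noise = rng.standard_normal(1000)
car_hrirs = np.zeros((2, 2, 1))
car_hrirs[0, 0, 0] = car_hrirs[1, 1, 0] = 1.0  # trivial pass-through "HRIRs"
anechoic_hrirs = np.ones((1, 2, 1))
mixture = simulate_car_listening(enhanced, noise, car_hrirs, anechoic_hrirs, 5.0)
print(mixture.shape)
```

With the trivial pass-through impulse responses used in the usage example, the music reaches the ears unchanged, which makes it easy to check that the added noise sits at the requested SNR.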

1.2.2 Processing of the reference signal

- Apply anechoic room impulse responses.

To learn more about HAAQI, please refer to our Learning Resources and to our Python HAAQI implementation.

The output of the evaluation stage is a CSV file with all the HAAQI scores.
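If you want a single summary figure from the evaluation output, a sketch like the following averages one column of the CSV file. The column layout is not specified here, so `score_column` must be set to whatever header the evaluation script actually writes; the function name `mean_score` is hypothetical.

```python
import csv
import io
import statistics


def mean_score(csv_file, score_column):
    """Return the mean of `score_column` over all rows of a CSV file object."""
    reader = csv.DictReader(csv_file)
    scores = [float(row[score_column]) for row in reader]
    if not scores:
        raise ValueError("no rows found in the CSV file")
    return statistics.mean(scores)


# Toy data for illustration only; these are not real evaluation results.
example = io.StringIO("scene,score\nS001,0.5\nS002,0.7\n")
print(mean_score(example, "score"))
```

For a real results file, open it with `open(path, newline="")` and pass the file object to `mean_score`.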

2. Software

All the necessary software to run the recipes and make your own submission is available on our Clarity-Cadenza GitHub repository.

The official code for the first challenge was released as version v0.3.4.

To avoid any conflicts, we strongly recommend that you work with version v0.3.4 rather than with the code from the main branch. To install this version, you can:

- Download the files of release v0.3.4 from: https://github.com/claritychallenge/clarity/releases/tag/v0.3.4

- Clone the repository and check out version v0.3.4:

git clone https://github.com/claritychallenge/clarity.git

cd clarity

git checkout tags/v0.3.4

- Install pyclarity from PyPI as:

pip install pyclarity==0.3.4
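After installing, one way to confirm which pyclarity version is active in your Python environment is the standard-library `importlib.metadata` module. This sketch assumes the distribution name `pyclarity`, as used in the `pip install` command above; the helper names are hypothetical.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version of `package`, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def check_pyclarity(expected="0.3.4"):
    """Report whether the recommended pyclarity version is installed."""
    found = installed_version("pyclarity")
    if found is None:
        print("pyclarity is not installed")
        return False
    if found != expected:
        print(f"pyclarity {found} is installed; version {expected} is recommended")
        return False
    print(f"pyclarity {found} is installed")
    return True


check_pyclarity()
```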

3. Baseline

In the Clarity/Cadenza GitHub repository, we provide a baseline system. Please visit the baseline on the GitHub webpage and the Baseline page to read more about the baseline and learn how to run it.